How CJE Plans Sample Size and Budget

This is a high-level, practical guide to how CJE recommends oracle vs surrogate allocation. The goal is simple: stop guessing, hit your decision target, and spend budget where it actually improves confidence.

TL;DR

- You can start with no data: run simulation-based planning first, then refine with pilot data when available.

- Then pick your planning mode: fixed budget or target MDE.

- CJE returns a concrete plan: recommended total samples, oracle labels, and expected detectable effect size.

Who this is for: teams evaluating model/prompt/policy variants where oracle labels are expensive and judge scores are cheap.

New here?

This post assumes you know why calibrating AI judges matters. If not, start with the flagship post.

Your AI Metrics Are Lying to You →

Want the math?

The Square Root Law derivation and formal variance decomposition.

CJE paper, Appendix I →

Want to run this workflow end-to-end?

Open planning notebook in Colab →

What CJE planning solves

Every evaluation that uses judge scores faces one budgeting question: how much spend goes to oracle labels versus cheap surrogate labels?

If you under-invest in oracle labels, you get false certainty. If you over-invest, you lose scale. CJE planning gives a concrete recommendation instead of a guess.

The workflow is practical: start from a simulation or a pilot, choose either a fixed budget or a target MDE, and let CJE return a recommended sample plan.

Why CJE needs both oracle and surrogate labels

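CJE balances two uncertainty sources:

- Evaluation uncertainty: sampling noise in the judge-scored evaluation set, which shrinks as the total number of samples grows.

- Calibration uncertainty: error in the mapping from judge scores to oracle outcomes, which shrinks only as the number of oracle labels grows.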

In practice, this is why adding only cheap judge scores often fails to tighten confidence enough: calibration error can dominate at low oracle coverage.

Practical warning

Many frameworks under-account for calibration uncertainty. That can produce narrow confidence intervals that look precise but are not decision-safe. In our Arena benchmark, naive CIs on uncalibrated scores achieved 0% coverage.
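To make the warning concrete, here is a toy simulation of a narrow but invalid interval. It is a sketch under invented assumptions, not the Arena benchmark: the true mean, the noise levels, the judge bias of 0.10, and the function name `naive_ci_coverage` are all hypothetical.

```python
import random
import statistics


def naive_ci_coverage(bias=0.10, n=1000, trials=200, seed=0):
    """Fraction of naive 95% CIs on raw judge scores that contain the true mean.

    Toy setup (all values are assumptions): oracle outcomes have mean 0.5,
    and the judge reports the outcome plus a constant bias plus noise.
    """
    rng = random.Random(seed)
    true_mean = 0.5
    hits = 0
    for _ in range(trials):
        # Only judge scores are observed; no oracle labels, no calibration.
        scores = [
            rng.gauss(true_mean, 0.2) + bias + rng.gauss(0.0, 0.1)
            for _ in range(n)
        ]
        mean = statistics.fmean(scores)
        se = statistics.stdev(scores) / n ** 0.5
        if mean - 1.96 * se <= true_mean <= mean + 1.96 * se:
            hits += 1
    return hits / trials


print("coverage with biased judge:  ", naive_ci_coverage(bias=0.10))
print("coverage with unbiased judge:", naive_ci_coverage(bias=0.0))
```

Adding more judge scores only shrinks the interval around the biased mean, so coverage gets worse, not better. Oracle labels are what let you estimate and correct the bias.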

How CJE recommends the allocation

CJE combines pilot-estimated uncertainty with your cost model, then recommends the oracle/surrogate split that maximizes decision power for your budget.

Practical intuition: oracle labels are expensive but high-leverage; surrogate labels are cheap and broad. Good plans balance both instead of maximizing one side.

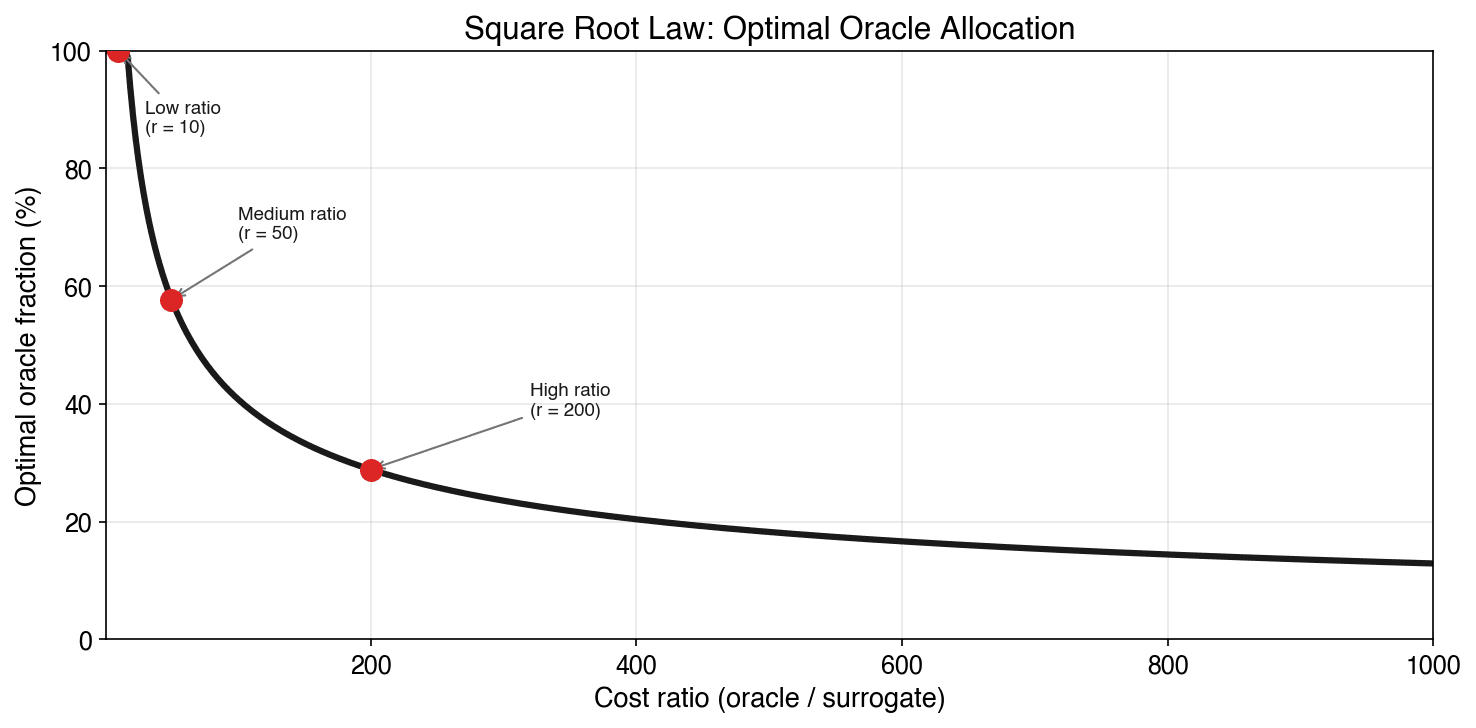

Optimal oracle fraction as a function of cost ratio. Red dots show representative cost-ratio scenarios; at a 200× ratio, the optimal oracle fraction is still about 29%.

What this means in practice


| Budget | Total Samples | Oracle Labels | Oracle % | MDE |
|---|---|---|---|---|
| $100 | 170 | 49 | 28.8% | 28.5% |
| $500 | 851 | 245 | 28.8% | 12.8% |
| $1,000 | 1,702 | 491 | 28.8% | 9.0% |
| $5,000 | 8,512 | 2,457 | 28.9% | 4.0% |
| $10,000 | 17,025 | 4,914 | 28.9% | 2.9% |

Assumes surrogate cost = $0.01, oracle cost = $2.00 (200× ratio), 80% power, α=0.05. MDE is for pairwise policy comparison. Your numbers will differ, so start with simulation and then fit your own σ² components from pilot labels.

Treat these numbers as an example, not a universal constant. The right split depends on your domain and pilot data.
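A quick way to read the table is to check its arithmetic. The sketch below makes two assumptions that are inferred from the numbers rather than stated by CJE: every sample gets a surrogate score and the oracle subset is paid for on top, and MDE shrinks with the square root of budget when the split is held fixed. The helper names are hypothetical.

```python
# Rows copied from the example table: (budget $, total samples, oracle labels, MDE %)
rows = [
    (100, 170, 49, 28.5),
    (500, 851, 245, 12.8),
    (1000, 1702, 491, 9.0),
    (5000, 8512, 2457, 4.0),
    (10000, 17025, 4914, 2.9),
]
C_S, C_Y = 0.01, 2.00  # surrogate and oracle cost per label, from the table note


def spend(n, m):
    """Assumed cost model: n surrogate scores plus m oracle labels."""
    return C_S * n + C_Y * m


def scaled_mde(mde_ref, budget_ref, budget):
    """Assumed scaling at a fixed split: MDE is proportional to 1/sqrt(budget)."""
    return mde_ref * (budget_ref / budget) ** 0.5


def budget_for_mde(mde_target, mde_ref, budget_ref):
    """Invert the same scaling: halving the MDE takes about 4x the budget."""
    return budget_ref * (mde_ref / mde_target) ** 2


for budget, n, m, mde in rows:
    print(
        f"${budget:>6}: spend=${spend(n, m):9.2f}  "
        f"oracle%={100 * m / n:5.1f}  "
        f"MDE scaled from $100 row={scaled_mde(28.5, 100, budget):5.2f}%  "
        f"(table: {mde}%)"
    )

# Rough extrapolation from the $1,000 row to a 5% MDE under the same assumptions.
print(f"budget for 5% MDE: about ${budget_for_mde(5.0, 9.0, 1000):,.0f}")
```

Under these assumptions the rows are internally consistent, which is why the oracle percentage barely moves while the MDE falls: more budget buys more of both label types at the same ratio.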

Pick an operating point, not a magic number

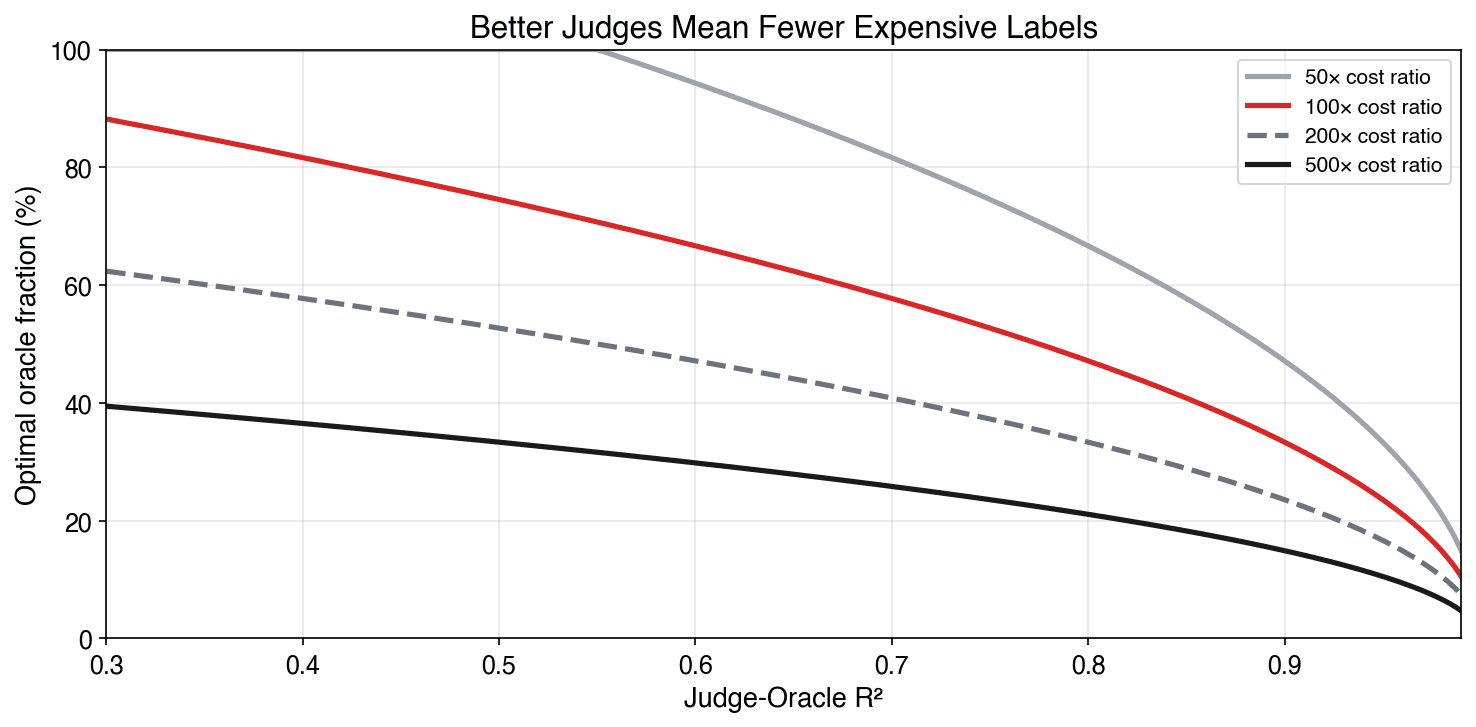

The 29% oracle fraction above assumed R² = 0.85 and a 200× cost ratio. Change either input and the optimum shifts dramatically.

Each curve is a different cost ratio. Read across: as your judge improves, the oracle fraction you need drops toward zero. A perfect judge (R² = 1) needs no oracle labels at all.

Find your judge's R² on the x-axis and your cost ratio among the curves — that gives you a starting point. Then validate with pilot data.

Practical workflow: simulate first, refine with pilot

The planning notebook supports a no-data start. Simulate from plausible judge quality and costs, then upgrade to pilot-fitted planning once you collect real labels.

CJE planning workflow

- Quick planning (no data): set costs and run `simulate_planning` or `simulate_variance_model`.

- Choose mode: fixed budget (`plan_evaluation`) or target MDE (`plan_for_mde`).

- Optional refinement: fit variance from pilot labels with `fit_variance_model`.

- Execute: run the full eval at the recommended `(n, m)` split.

Want formulas and API-level details? Skip to Technical details (optional) at the bottom.

Failure modes that break decisions

Too few oracle labels

With around 50 oracle labels, calibration uncertainty is usually large and decision power is weak. In the example table above, 49 oracle labels at a $100 budget yields an MDE of 28.5%.

All surrogate, no calibration

You get narrow intervals around a potentially biased estimate. Precision without validity is a trap.

All oracle, no scaling plan

It works once, but it does not scale across many policies. Hybrid plans keep quality while controlling cost.

No pilot yet? Use a conservative sweep

This is exactly what the planning notebook does in Step 1: start with rough judge-quality assumptions and sweep scenarios before spending on pilot labels.

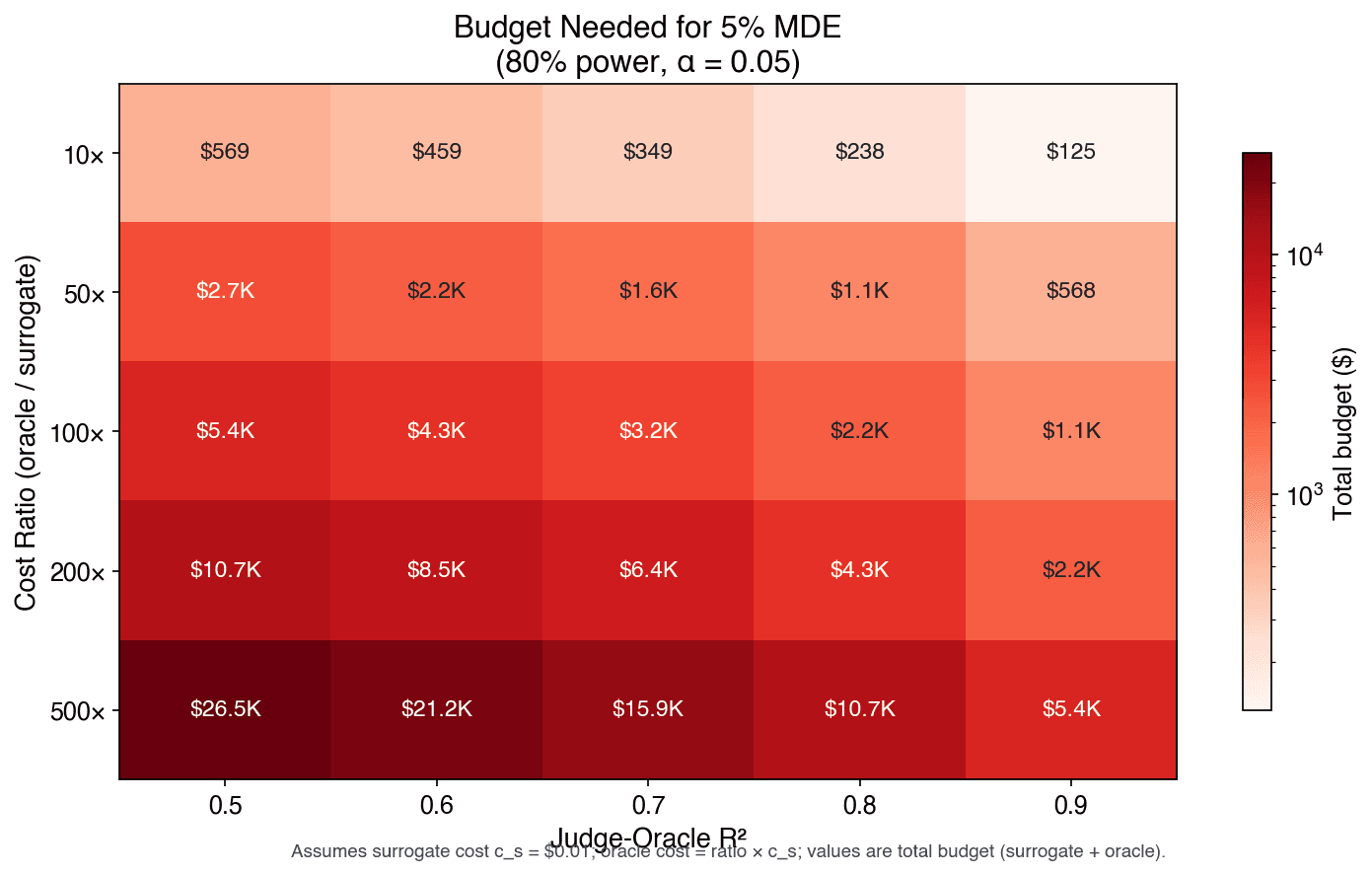

Ballpark total budget for a 5% MDE (80% power, α=0.05) across judge quality and cost ratios. Assumes surrogate cost = $0.01 and oracle cost = ratio × surrogate cost.

Rule of thumb

At a 200× cost ratio and a judge R² around 0.7, expect a budget in the mid four figures to detect a 5% delta. If budgets are tight, improve judge quality first.

Technical details (optional)

If you want the formal decomposition and APIs, this is the compact version CJE uses.

Variance decomposition

Var(θ̂) = σ²_eval / n + σ²_cal / m

CJE estimates these terms from pilot data, then solves allocation under your cost model.

Square Root Law

m* / n* = √( σ²_cal · c_S ) / √( σ²_eval · c_Y )

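Here n is the total number of judge-scored samples, m is the number of oracle labels, c_S is the cost per surrogate score, and c_Y is the cost per oracle label. The oracle fraction scales with the square root of the uncertainty and cost terms, not linearly with cost alone.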

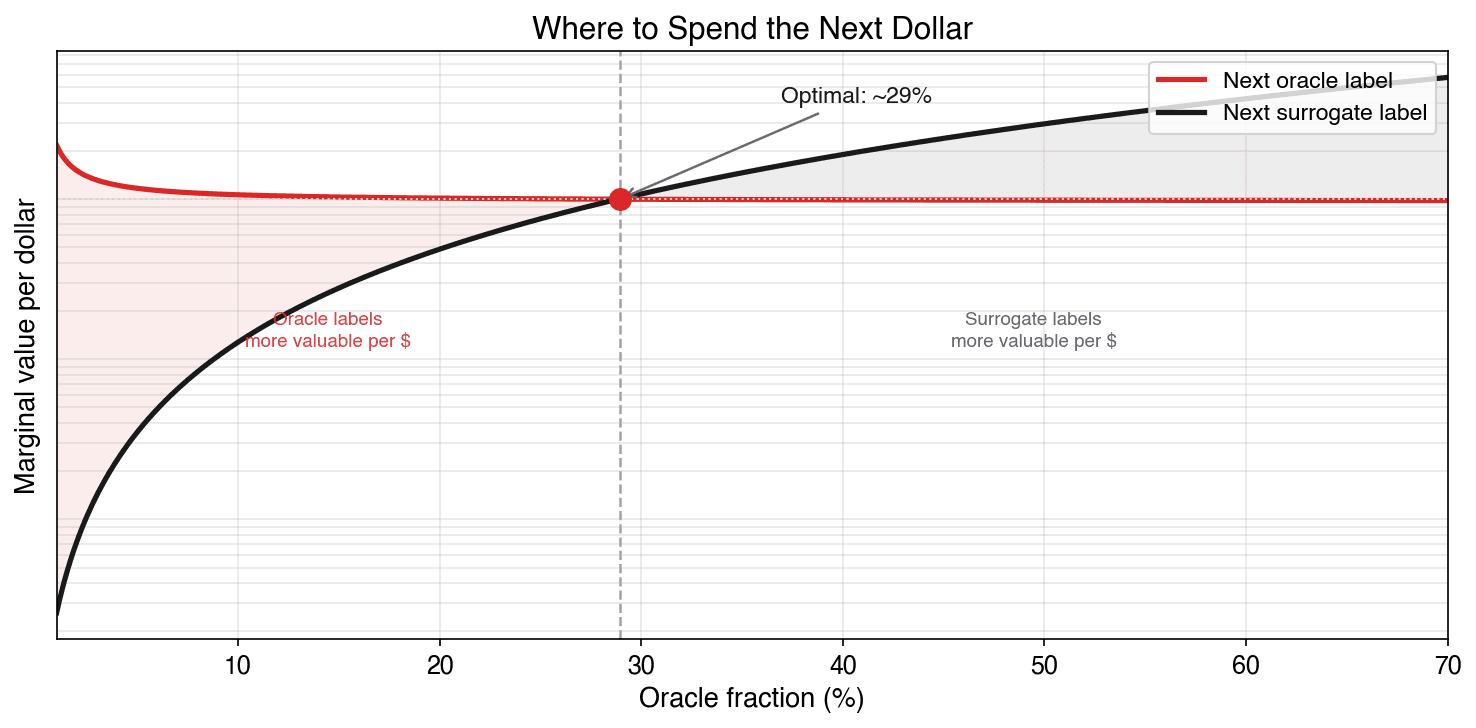

Intuition: equalizing marginal value

The Square Root Law falls out of a simple principle: at the optimum, the last dollar spent on oracle labels and the last dollar spent on surrogate labels reduce variance by the same amount. If oracle labels are still higher-leverage per dollar, you should buy more of them (and vice versa).

At low oracle fractions, each oracle label is far more valuable per dollar than each surrogate label. The curves cross at ~29%, the optimal allocation. Past that point, surrogates give more bang for the buck.
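The equal-marginal-value argument can be written out as a short derivation. This is a sketch that treats n and m as continuous and assumes total spend is c_S·n + c_Y·m; the budget symbol B is introduced here for illustration.

```latex
\begin{aligned}
&\min_{n,\,m}\ \frac{\sigma^2_{\mathrm{eval}}}{n} + \frac{\sigma^2_{\mathrm{cal}}}{m}
  \quad \text{subject to} \quad c_S\, n + c_Y\, m = B \\[4pt]
&\text{Variance reduction per dollar:}\quad
  -\frac{1}{c_S}\frac{\partial \mathrm{Var}(\hat\theta)}{\partial n}
    = \frac{\sigma^2_{\mathrm{eval}}}{c_S\, n^2},
  \qquad
  -\frac{1}{c_Y}\frac{\partial \mathrm{Var}(\hat\theta)}{\partial m}
    = \frac{\sigma^2_{\mathrm{cal}}}{c_Y\, m^2} \\[4pt]
&\text{Setting them equal:}\quad
  \frac{\sigma^2_{\mathrm{eval}}}{c_S\, n^2} = \frac{\sigma^2_{\mathrm{cal}}}{c_Y\, m^2}
  \ \Longrightarrow\
  \frac{m^*}{n^*} = \sqrt{\frac{\sigma^2_{\mathrm{cal}}\, c_S}{\sigma^2_{\mathrm{eval}}\, c_Y}}
\end{aligned}
```

The last line is the Square Root Law stated earlier, with both square roots combined under one radical.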


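```python
# Python - planning core
from cje import (
    fit_variance_model,
    plan_for_mde,
    plan_evaluation,
    simulate_planning_sweep,
    CostModel,
)

# pilot_data: your pilot set of judge scores and oracle labels, loaded beforehand.
# Fit the variance components from the pilot labels.
model = fit_variance_model(pilot_data, verbose=True)

# Per-label costs in dollars (a 200x ratio here).
cost = CostModel(surrogate_cost=0.01, oracle_cost=2.00)

# Target-MDE mode: plan for detecting a 5% difference.
plan = plan_for_mde(target_mde=0.05, variance_model=model, cost_model=cost)

# Fixed-budget mode: plan for a $1,000 spend.
plan_budget = plan_evaluation(budget=1000, variance_model=model, cost_model=cost)

# No-data mode: sweep assumed judge R² values at a fixed budget.
sweep = simulate_planning_sweep(
    r2_values=[0.5, 0.7, 0.9],
    budget=5000,
    cost_model=cost,
)
```

Bottom line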

Label budgeting is not a dark art. It is a solvable optimization problem. Run a pilot, estimate your variance components, and let the planning tools give you the highest-precision allocation per dollar.

The most common mistake is still zero oracle labels. The second is token oracle coverage (<100 labels) with inflated claims. Both create false certainty and bad launch decisions.

Start planning your evaluation

Install CJE and run the planning tools on your own data. The pilot takes a day; the planning takes minutes.

We welcome your feedback

CJE is actively evolving. If you have suggestions, find errors, or want to share your experience using the planning tools on real data, we'd love to hear from you.